New CT Online Ordering Website

J&D Electronics is a South Korean manufacturer that has a new online presence at jdmetering.com. I’ve perused their website and found it pretty easy to use.

Here are a few things that stood out to me:

- They have a 100/5A CT that is very small (24 mm opening), which is unique.

- Their spec sheets are typically very detailed; it’s always nice to have more information rather than not enough.

- They have a very economical Aac to Vdc transducer. See the JSXXX-V and JSXXX-VH series.

- They offer a lot of products and product categories. They seem to be a one-stop shop for anything related to current transducers or transformers.

Interestingly enough, the website is also available in Spanish.

Currently they don’t seem to offer very many power meters, but I suppose that could change.

Understanding Current Transformer Accuracy Classes

IEC 61869-2 defines the new current transformer accuracy classes and is intended to replace the older standard, IEC 60044-1. (IEC 61869-1 covers the general requirements shared by all instrument transformers.) The new and old standards are essentially identical, but IEC 61869-2 consolidated two parts of the older standard:

- IEC 60044-1 : Instrument transformers – Part 1: Current transformers

- IEC 60044-6 : Instrument transformers – Part 6: Requirements for protective current transformers for transient performance

While IEEE/ANSI C57.13 remains active, most current sensor manufacturers prefer the IEC standard because it is not specific to instrument transformers with a 5A output. Rather than dealing strictly with 5A output devices, the IEC standard is more general and also covers devices with a mA or voltage output.

The next thing to be aware of is that the 0.5 Class and the 0.5s Class of IEC 61869-2 are different. The 0.5s Class is more rigorous at low currents, i.e. when the primary current is only a small fraction of the rated current. For example, at 5% of the rated current, the 0.5 Class allows a percentage current ratio error of up to 1.5%, whereas 0.5s requires 0.75% or better.
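The percentage current ratio error compares the secondary current against the actual primary current, after scaling it back up by the rated transformation ratio: ε = (Kn × Is − Ip) / Ip × 100%. Here Kn is the rated ratio, for example 100 / 5 = 20 for a 100/5A CT. The Python sketch below applies that calculation to a hypothetical reading. It shows how a single measurement can pass the 0.5 Class limit and still fail the 0.5s limit.

```python
def ratio_error_percent(primary_amps, secondary_amps, rated_ratio):
    """Percentage current ratio error: (Kn * Is - Ip) / Ip * 100.

    rated_ratio is Kn, the rated primary current divided by the rated
    secondary current (e.g. 100 / 5 = 20 for a 100/5A CT).
    """
    return (rated_ratio * secondary_amps - primary_amps) / primary_amps * 100.0


# Hypothetical reading: a 100/5A CT at 5% of rated current (Ip = 5 A)
# produces Is = 0.2475 A instead of the ideal 0.25 A.
error = ratio_error_percent(5.0, 0.2475, 100 / 5)
print(f"ratio error at 5%: {error:.2f}%")
print("within 0.5 Class limit (1.5%):", abs(error) <= 1.5)
print("within 0.5s limit (0.75%):", abs(error) <= 0.75)
```

The reading works out to a ratio error of -1%. That is inside the 0.5 Class limit at 5% of rated current but outside the 0.5s limit.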

The biggest single mistake I see people make when comparing the accuracy of CTs is to assume that 0.5% accuracy = IEC 61869-2 0.5 Class (or the equivalent class in some other standard). THIS IS NOT NECESSARILY THE CASE!

I recently tested several CTs from a manufacturer (that I’ll leave unnamed). The manufacturer’s website stated that the CTs were 0.5% accurate from 10% to 120% of the rated current, and my tests confirmed that claim. However, the CTs were not 0.5 Class! Here’s why:

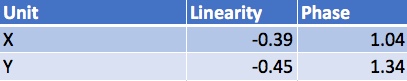

This particular unit was rated at 20A, and its ratio error at 20A is below 0.5%. I also tested it at 10%, 25%, 50%, 75% and 120% of the rated current, and in each case the ratio error was below 0.5%. So the manufacturer’s claim is true. However, the unit’s phase shift is too large for the 0.5 Class, which requires the phase displacement to be no more than 30 minutes (0.5 degrees) at 100% of the rated current.
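To make the distinction concrete, here is a Python sketch that checks a set of readings against both the ratio-error limits and the phase-displacement limits. Some assumptions to note:

- The limit table reflects my reading of the IEC 61869-2 tables for the 0.5 and 0.5s classes. Verify it against the standard before relying on it.
- Only the standard's own test points are checked; there is no interpolation between them.
- The readings in the usage example are hypothetical. They are not the measurements from the test above.

```python
# Limits for measuring CTs as I read them from the IEC 61869-2 accuracy tables;
# verify against your copy of the standard before relying on them.
# percent of rated current -> (max |ratio error| in %, max |phase displacement| in minutes)
LIMITS = {
    "0.5": {5: (1.5, 90), 20: (0.75, 45), 100: (0.5, 30), 120: (0.5, 30)},
    "0.5s": {1: (1.5, 90), 5: (0.75, 45), 20: (0.5, 30), 100: (0.5, 30), 120: (0.5, 30)},
}


def degrees_to_minutes(degrees):
    """Phase displacement is specified in minutes of arc: 1 degree = 60 minutes."""
    return degrees * 60.0


def check_class(readings, accuracy_class):
    """Return a list of failures; an empty list means every test point passed.

    readings maps percent of rated current to
    (ratio error in %, phase displacement in minutes).
    Standard test points without a reading are reported as untested;
    readings at other points (e.g. 10% or 75%) are not checked.
    """
    failures = []
    for point, (max_ratio, max_phase) in sorted(LIMITS[accuracy_class].items()):
        if point not in readings:
            failures.append(f"{point}%: not tested")
            continue
        ratio_error, phase_minutes = readings[point]
        if abs(ratio_error) > max_ratio:
            failures.append(f"{point}%: ratio error {ratio_error}% exceeds {max_ratio}%")
        if abs(phase_minutes) > max_phase:
            failures.append(f"{point}%: phase {phase_minutes}' exceeds {max_phase}'")
    return failures


# Hypothetical readings: every ratio error is under 0.5%, but the phase
# displacement is larger than the 0.5 Class allows.
readings = {5: (0.45, 80), 20: (0.40, 60), 100: (0.30, 42), 120: (0.30, 40)}
for problem in check_class(readings, "0.5"):
    print(problem)
```

With these readings, every ratio error is within "0.5% accuracy". Even so, all three reported failures are on phase displacement, at 20%, 100% and 120% of rated current.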

In conclusion, 0.5% accuracy ≠ 0.5 Class ≠ 0.5s Class. Be sure to look at the standard the CT complies with when comparing products. And, if it doesn’t state any standard, be wary!

Purchasing a Current Transformer

When purchasing a current transformer or transducer, the most important considerations are:

- What type of input are you expecting? This may include an AC amperage input, DC amperage input, DC voltage input, etc.

- What type of output does the meter or monitor you are working with expect from the sensor? In the power monitoring industry the most common requirement is 333 mVac, but others I’ve seen include 5A, 0-5Vac and 0-2Vac.

True current transformers have the same type of input as output: AC current in, AC current out. In this sense, current “transformers” that output an AC voltage from an AC amperage input are not true transformers but rather what is called a current transducer. If you get either of these two considerations wrong, you’re going to be disappointed when you install and use the current sensor. Other considerations, such as size and style, are secondary.

You can get a wide assortment of current transducers from Aim Dynamics, including these options:

- Aac input -> Vac output

- Aac input -> Vdc output

- Aac input -> 4-20 mA output

- Adc input -> Vdc output

- Adc input -> Vac output

- Vac input -> 4-20 mA output

- Vac input -> Vdc output

Back to current transformers. As explained on Aim Dynamics’ website, a current transformer is a device that “transforms” or “steps down” the current on its “primary winding” to a proportionally smaller alternating current on its “secondary winding,” or output. In this way, current transformers can convert a potentially dangerous current to one that is more manageable and easier to work with. Because the output current is proportional to the input, we can determine the actual current on the primary conductor by measuring the corresponding current on the secondary output. This makes current transformers ideal for power monitoring, controlling devices, etc.

True current transformers are passive devices, meaning that they do not require external power; instead, they rely on electromagnetic induction. More specifically, they typically contain a laminated core of low-loss magnetic material with a wire wound around it. The secondary current is inversely proportional to the number of windings, or “turns,” on the secondary, as expressed by this equation:

(Secondary Current) = (Primary Current) * (Number of turns on the primary winding / Number of turns on the secondary winding). We abbreviate this as Is = Ip * (Np/Ns).

In most situations with power monitoring current transformers, the number of turns on the primary conductor = 1. That is to say, the conductor is simply passed through the center hole of the transformer, so in this situation we get:

Is = Ip * (1 / Ns), or Is = Ip / Ns.
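The Python sketch below is a quick check of the ideal turns-ratio relationship, ignoring core losses and magnetizing current. It works out how many secondary turns a 100/5A CT would need with a single primary pass. It also shows what happens if the conductor is looped through the window twice (Np = 2). The function names are illustrative only.

```python
def secondary_current(primary_amps, secondary_turns, primary_turns=1):
    """Ideal CT: Is = Ip * (Np / Ns)."""
    return primary_amps * primary_turns / secondary_turns


def secondary_turns_for(rated_primary, rated_secondary, primary_turns=1):
    """Turns needed so that rated primary current gives rated secondary current."""
    return primary_turns * rated_primary / rated_secondary


ns = secondary_turns_for(100, 5)                    # 20.0 turns for a 100/5A CT
print(secondary_current(100, ns))                   # 5.0 A at full rated current
# Looping the conductor through twice (Np = 2) doubles the secondary current,
# so 50 A on the conductor now produces the full 5 A output.
print(secondary_current(50, ns, primary_turns=2))   # 5.0
```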

The most common “true” current transformer used for power monitoring and power controls has a 5 Amp AC output, but 1 Amp AC outputs also exist. That said, many current sensors in use today have a large number of windings, resulting in a very low current output. Rather than use this small current directly, manufacturers often add a “burden” resistor across the secondary winding to produce a voltage. Many industries prefer this voltage output because it’s easier to work with. The voltage is given by Ohm’s law:

Voltage = Current * Resistance, abbreviated V = I * R

Using this formula, let’s come up with a hypothetical current sensor. Let’s say that we want to produce 333mV when 1000 Amps are “sensed” on the primary conductor, which in our scenario will be a bus bar passing through the center. If the current sensor has 7500 turns, we would expect 1000/7500 Amps, or 133 mA of current if no burden resistor existed. But in our case we want 333 mV of output, so we can divide 333 mV / 133 mA (or .333 V/ .133 A) and we find that the needed burden resistor should be 2.5 Ohms. Once burdened in this way, we can ignore the amperage output (it’s pretty small after all) and consider this a “voltage output” device. Because the current output is alternating current (AC) the output voltage is also alternating, abbreviated Vac.
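Here is the same arithmetic as a short Python sketch, reusing the hypothetical 1000 A / 7500-turn / 333 mV sensor from the example above. It also shows the reverse calculation a meter would perform, scaling a measured output voltage back to primary amps. The helper names are illustrative. The ideal-transformer assumption ignores losses.

```python
def burden_ohms(target_volts, primary_amps, secondary_turns, primary_turns=1):
    """Resistor that turns the ideal secondary current into target_volts (R = V / I)."""
    secondary_amps = primary_amps * primary_turns / secondary_turns
    return target_volts / secondary_amps


def primary_from_output(measured_volts, full_scale_volts, rated_primary_amps):
    """Scale an output voltage back to primary current (linear sensor assumed)."""
    return measured_volts / full_scale_volts * rated_primary_amps


r = burden_ohms(0.333, 1000, 7500)
print(f"burden resistor: {r:.2f} ohms")                   # 2.50
print(f"{primary_from_output(0.1665, 0.333, 1000):.1f} A")  # 500.0 A
```

Because the output is linear, half of the full-scale 333 mV corresponds to half of the rated 1000 A.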

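A 5A-output CT is hazardous if its secondary is left open-circuited while the primary is energized, because a dangerously high voltage can then develop across its terminals. Current sensors with a 333 mV output don’t have this risk, because the current output is extremely low and the burden resistor is already connected across the secondary.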

Current sensors that change the output type are called current transducers. The hypothetical current sensor described earlier would be most accurately called a current transducer, yet they often simply get called current transformers because they operate using the same basic principles as a current transformer.

BizTalk Adapter 3.0 and Oracle Stored Procedures

13-Sep-2010 Update: After reading this post, read Charles’ comment – perhaps there’s just a mix-up on versions (although I haven’t verified this yet).

I was helping advise a coworker on a project where we wanted to use a WCF Oracle receive location to pull data from a database. The poll query looked something like `select * from tablename where flag = 'R' for update`, and the post-poll statement did something like `update tablename set flag = 'P'`. I wanted to avoid the possibility that additional 'R' records would be written after the select statement but before the update statement; those records would be updated to 'P' without ever being queried. To prevent this, I had chosen to use a serializable isolation level. However, there was a concern that locking the whole table, even for a short period, might cause a problem. Instead, we decided to use a stored procedure that would return the records for us and ensure that only the records returned would be updated. Sounds simple enough, right?
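The intent of that stored procedure can be sketched in Python: mark as processed only the rows that were actually returned. This is only an illustration of the idea, and it rests on a few assumptions:

- It uses the standard-library sqlite3 module as a stand-in for Oracle.
- The id and payload columns are hypothetical.
- It is not a description of how the WCF Oracle adapter itself polls.

The key point is that the UPDATE is keyed on the ids that were read. A new 'R' row inserted after the SELECT is therefore left for the next poll rather than silently marked 'P'.

```python
import sqlite3


def poll_ready_rows(conn):
    """Return rows flagged 'R' and mark exactly those rows (by id) as 'P'."""
    with conn:  # commits the updates on success, rolls back on an exception
        rows = conn.execute(
            "SELECT id, payload FROM tablename WHERE flag = 'R' ORDER BY id"
        ).fetchall()
        # Update only the keys we actually read; anything inserted after the
        # SELECT keeps flag 'R' and is picked up by the next poll.
        conn.executemany(
            "UPDATE tablename SET flag = 'P' WHERE id = ? AND flag = 'R'",
            [(row[0],) for row in rows],
        )
    return rows


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE tablename (id INTEGER PRIMARY KEY, payload TEXT, flag TEXT)")
    conn.executemany(
        "INSERT INTO tablename (payload, flag) VALUES (?, ?)",
        [("a", "R"), ("b", "R"), ("c", "P")],
    )
    conn.commit()
    print(poll_ready_rows(conn))  # the two 'R' rows, now marked 'P' in the table
```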

Well, this is where I was thoroughly disappointed with the development team that worked on the BizTalk Adapter Pack 3.0. I had found Microsoft articles, Microsoft code samples, etc. explaining how to call a stored procedure in a WCF Oracle receive location, but for some reason my coworker couldn’t get any of them to work (here’s an example of one such Microsoft page). I decided to slow down and take a look myself. I was initially confused too, until I came across this page and read “the Oracle Database adapter does not support stored procedures in polling. To address this issue, the Oracle Database adapter enables clients to specify a polling query and a post poll query.” Of course I had to laugh at this point, because our whole reason for wanting a stored procedure was that the polling query and post-poll statement were too limiting.

Microsoft has done something that they rarely do – they removed functionality in a newer version of their product. Whereas previously you could call a stored procedure in a WCF Oracle receive location, you no longer can. I couldn’t believe it. I suspect it has something to do with a disagreement between Microsoft and Oracle on something or other, but it’s still very disappointing.

BizTalk Performance and DNS

We recently solved a performance problem that had been plaguing us for quite some time on one of our Test/QA BizTalk Application servers. Even if you aren’t experiencing the problem to the full extent that we were, I’ll bet I can improve your performance too – want to try? Here’s a brief summary of our problem:

We have two servers in this BizTalk group. I’ll call them Primary and Secondary. Primary is the SSO Server, and it was also the slow-performing server. We had our system admin folks look into the server to see if maybe we had some failing hardware. They came back and said everything was fine. As the problem continued, we started to notice that it really only performed slowly when contacting the database. Primary and Secondary are in the same data center, so this seemed a little strange. Since Secondary was performing well, we knew the problem wasn’t the database. We then had the network engineers look into things. I’m not sure exactly what they did, but they came back and said that the network was fine.

We ran Microsoft Network Monitor 3.3 and found that Primary was contacting its secondary DNS server for lookups on the database name (which happened to reside in another state, which explains part of the performance problem). To make a long story short, we eventually figured out that the primary DNS server had been entered slightly incorrectly on one of the two servers (Primary) – instead of a 220, there was a 200 somewhere in the IP address.

So what? How can this help you? Well, we found that even when the primary DNS server was corrected, there were still excessive DNS lookups taking place. When we exported all bindings from our BizTalk Group using the Admin console, the operation took 40 seconds after the correction was made (previously it would take minutes). We then added our database name to the hosts file in c:\windows\system32\drivers\etc\, something like this:

123.222.45.162 databasename

123.222.45.162 databasename.full.domain.name

After doing this, the same export command took 20 seconds! So, here’s my question to you (please add a comment): How long does it take for you to export the bindings for all your applications using the Admin Console? If you add a hosts entry for your db server, what kind of performance gain (if any) do you see?

As I thought about this, I concluded that adding the entries to the hosts file makes a lot of sense. After all, my db server’s IP address is static, and I would know about it should it ever need to change. Is there really a point in making DNS lookups every X milliseconds? I don’t think this would be needed if the DNS cache were being used; however, the Admin Console appears to bypass the DNS cache.
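To measure the effect of a hosts entry on your own servers, a rough Python sketch like this one can time name resolution. It goes through the operating system's resolver via socket.getaddrinfo, which consults the hosts file. It won't reproduce exactly what the Admin Console does. The function name is illustrative.

```python
import socket
import time


def average_lookup_ms(hostname, attempts=5):
    """Average time in milliseconds for the OS resolver to resolve hostname."""
    total = 0.0
    for _ in range(attempts):
        start = time.perf_counter()
        socket.getaddrinfo(hostname, None)  # raises socket.gaierror if unresolvable
        total += time.perf_counter() - start
    return total / attempts * 1000.0
```

For example, run `average_lookup_ms("databasename")` before and after adding the hosts entry, and compare the results. Here "databasename" is the placeholder from the hosts example above.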

SP1 and the Oracle Adapter in BizTalk Adapter Pack 2.0

I experienced the following problem the other day when trying to configure a WCF-Oracle send port after installing SP1 for BizTalk 2006 R2. I believe the problem would show itself when using BizTalk Adapter Pack 1.0 as well, but I didn’t verify this.

Here’s a text version of the error:

```
---------------------------
WCF-Oracle Transport Properties
---------------------------
Error loading properties.

(System.MissingMethodException) Method not found: 'System.Configuration.ConfigurationElement Microsoft.BizTalk.Adapter.Wcf.Converters.BindingFactory.CreateBindingConfigurationElement(System.String, System.String)'.
---------------------------
```

I know I’m not the first person to encounter this problem, because I’ve seen a comment posted elsewhere, but I might be the first to discuss it in a little more detail. The problem apparently affects not only WCF-Oracle send ports but also WCF-SQL. Viragg is correct in his comment that SP1 introduced a breaking change in Microsoft.BizTalk.Adapter.Wcf.Common.dll to the method BindingFactory.CreateBindingConfigurationElement. As he mentions, the SP1 version takes 3 parameters, while the pre-SP1 version only takes 2.

So what should you do? Well, you can get away with just using the WCF-Custom adapter and using the appropriate binding type, e.g. oracleBinding or sqlBinding.

Admittedly, it’s kind of annoying that you have to do this, but it works. Where it would really be a pain is if you were upgrading a production environment and had to do this reconfiguration, which might require your change control process to be used, etc.

The Oracle Adapter in BizTalk Adapter Pack v2.0

Has anyone been successful creating schemas based on an Oracle package using the new Oracle adapter (BAP v2.0)? We had a BizTalk Adapter Pack v1.0 send port that utilized an Oracle package. When we upgraded to v2.0, it stopped working, showing an error like this:

```
Event Type:     Warning
Event Source:   BizTalk Server 2006
Event Category: (1)
Event ID:       5743
Date:           2/12/2010
Time:           11:57:06 AM
User:           N/A
Computer:       YOUR_SERVER
Description:
The adapter failed to transmit message going to send port "ProcessGSTUpsert.WCF" with URL "oracledb://oracle_server_name/". It will be retransmitted after the retry interval specified for this Send Port. Details:"Microsoft.ServiceModel.Channels.Common.TargetSystemException: ORA-06550: line 2, column 1:
PLS-00306: wrong number or types of arguments in call to 'UPDATE_PROCEDURE'
ORA-06550: line 2, column 1:
PL/SQL: Statement ignored ---> Oracle.DataAccess.Client.OracleException ORA-06550: line 2, column 1:
PLS-00306: wrong number or types of arguments in call to 'UPDATE_PROCEDURE'
ORA-06550: line 2, column 1:
PL/SQL: Statement ignored
   at Oracle.DataAccess.Client.OracleException.HandleErrorHelper(Int32 errCode, OracleConnection conn, IntPtr opsErrCtx, OpoSqlValCtx* pOpoSqlValCtx, Object src, String procedure)
   at Oracle.DataAccess.Client.OracleException.HandleError(Int32 errCode, OracleConnection conn, String procedure, IntPtr opsErrCtx, OpoSqlValCtx* pOpoSqlValCtx, Object src)
   at Oracle.DataAccess.Client.OracleCommand.ExecuteNonQuery()
   at Microsoft.Adapters.OracleCommon.OracleCommonUtils.ExecuteNonQuery(OracleCommand command, OracleCommonExecutionHelper executionHelper)
   --- End of inner exception stack trace ---

Server stack trace:
   at System.ServiceModel.AsyncResult.End[TAsyncResult](IAsyncResult result)
   at System.ServiceModel.Channels.ServiceChannel.SendAsyncResult.End(SendAsyncResult result)
   at System.ServiceModel.Channels.ServiceChannel.EndCall(String action, Object[] outs, IAsyncResult result)
   at System.ServiceModel.Channels.ServiceChannel.EndRequest(IAsyncResult result)

Exception rethrown at [0]:
   at System.Runtime.Remoting.Proxies.RealProxy.HandleReturnMessage(IMessage reqMsg, IMessage retMsg)
   at System.Runtime.Remoting.Proxies.RealProxy.PrivateInvoke(MessageData& msgData, Int32 type)
   at System.ServiceModel.Channels.IRequestChannel.EndRequest(IAsyncResult result)
   at Microsoft.BizTalk.Adapter.Wcf.Runtime.WcfClient`2.RequestCallback(IAsyncResult result)".
```

I tried recreating the schema used by the Oracle adapter, but it complains as follows:

```
The categories in the 'Added categories and operations' list contain no operations for metadata generation.
```

This worked before the upgrade. Any ideas?

15-Feb-2010 Update:

See my reply to Antti – the runtime aspect of the problem was figured out. I’ll see if I can find a solution to the schema-generation problem.

BizTalk Server 2006 R2 SP1 – Have you tried it?

As you may know, SP1 for BizTalk 2006 R2 was released about a week ago. According to the BizTalk Server Team blog, it sounds quite impressive – like an update I would surely want on my servers. However, while I was troubleshooting a performance problem on one of our servers with Microsoft, the gentleman I worked with recommended I not install it – at least not yet. That puzzled me a bit. He indicated that one of his customers had installed it and, after running into a problem, was having trouble reversing (uninstalling) the service pack.

So I thought it’d be interesting to get your opinion on SP1. Have you installed it? Did you have any trouble? Did you notice any of the benefits described by the product team? Let me know how your experience went.

[Update as of 8-Feb-2010]:

One of the Microsoft Escalation Engineers read my post and wanted to assure me that there aren’t any issues with the installation of SP1 for BizTalk 2006 R2. Apparently the only “issue”, if you can call it that, is that the DB schema changes with SP1. If a rollback is required, it is more complicated, because the databases need to be restored to a point in time before SP1 was installed. I should also add that uninstalling SP1 is not supported by Microsoft. I’d certainly read through the SP1 Installation Guide before starting the upgrade process; there’s a lot to check before installing.

Because SP1 sounds like it has a lot of improvements I’m going to have SP1 installed on our servers soon – I’ll post an update once that happens (it will take a few weeks to get this into production).

BizTalk Disaster Recovery Planning

I agree with Nick Heppleston’s post entirely in that you had better test your DR plan in advance of the real disaster if you really want it to work! I can speak from experience here – my company’s previous plan sounded perfectly fine to me when I worked on it. In fact, when I discussed the plan with Tim Wieman and Ewan Fairweather they also agreed that it sounded reasonable (to their credit they didn’t get to see a written plan and they both cautioned that I had better test things to be sure). But, as you can probably already guess, when tested, the plan didn’t work. In this post I’ll talk about what I did (that didn’t work) so that you can avoid doing the same thing, and then point out a shortcoming in the MSDN documentation, as I see it.

Here’s what I attempted to do. Since our test/QA environment resides in another data center, far away from our production environment, we decided to use the test environment as our DR environment in the case of a lasting failure at the normal production site. In short, we were planning for a scenario where we’d lose both the BizTalk application servers and the SQL Server databases. We thought this was reasonable, since many disasters could easily cause both sets of hardware to stop functioning for an extended period of time.

Here’s a high-level overview of the steps we had in place before the disaster:

- We had configured regular backups with transaction log shipping on the destination (DR) database server.

- We had the IIS web directories and GAC of the BizTalk application servers backed up to the DR BizTalk application servers.

- We had a backup of the master secret available on the DR BizTalk application servers.

Here’s a high-level overview of the steps we planned to take after the disaster:

- Recover the production binaries and production web directories onto the test servers.

- Restore the production database using the DR instance.

- Unconfigure the test BizTalk application servers.

- Run the UpdateDatabase.vbs script and UpdateRegistry.vbs script on the test BizTalk application servers.

- Reconfigure the test BizTalk application servers, joining the SSO and BizTalk Groups.

- Recover Enterprise Single Sign-On.

In early November, we tested the scenario. To make a long story short, it didn’t work. Microsoft, also uncertain why things didn’t work (at first anyway), suggested a “hack” to get things working. That hack would then be reviewed by the product team, which would hopefully give its final blessing. The hack involved editing the SSOX_GlobalInfo table (part of the SSODB), updating gi_SecretServer with the “new” test server that would become the master secret server. At first it appeared to work, but a deeper look showed that we were wrong. It only appeared to be working because, by that point, the real production master secret server had been brought back up, since the DR exercise was taking too long. As a result, both BizTalk groups were running at the same time.

The problem that we faced can be summarized as follows: the MSDN documentation makes an assumption that wasn’t true in our case. Specifically, Microsoft expects the new master secret server (the test BizTalk app server in our case) either to have the same name as the previous master secret server OR to already be a part of the group prior to the disaster (though, of course, not as the master secret server). These limitations don’t really have anything to do with BizTalk, but rather with the Enterprise Single Sign-On product. I think they are unrealistic expectations, but they are limitations nonetheless.

In conclusion, you had better test out your DR scenario if you haven’t already, or be prepared for some ugly surprises. Please share your experiences with me. What does your DR plan assume? Have you had a nasty experience?

BizTalk Map Bug Causes Inconsistent Results

I found what I would call a bug with the BizTalk Mapper (does anyone dare call it a feature?). Let me show a simple version of the problem I encountered:

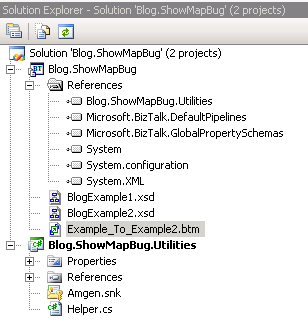

I created a BizTalk project that contains a map that calls an external assembly (in my case, part of the same solution), as shown above.

My external assembly is version 1.0.0.0, compiled in Debug mode, and it looks like this:

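```csharp
public class Helper
{
    // Called from the map's Scripting functoid. The input is ignored and a
    // hard-coded version string is returned, so the map output shows which
    // build of the assembly actually ran.
    public string ReturnVersion(string ignore)
    {
        return "1.0.0.0";
    }
}
```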

I deployed the map and the assembly. I tested the map. Sure enough, “1.0.0.0” is mapped to “SomethingElse”. No surprises yet.

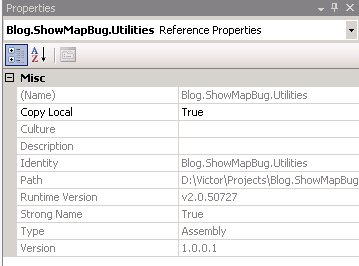

I then changed the ReturnVersion function to return “1.0.0.1”. I also incremented the version of the external assembly and the BizTalk project, and compiled in Release mode (let’s pretend I’m done with development and ready to release). I double-checked the references in Solution Explorer – sure enough, Blog.ShowMapBug.Utilities shows the new version as the reference.

I deployed the solution and GAC’d the assembly. I updated my send port to use the new map. I restarted the Host Instance. I then tested things out. What would you expect to get mapped to SomethingElse? 1.0.0.1, right? Well, it doesn’t work. 1.0.0.0 still shows up. Why?

If I open the BizTalk map in an XML editor and search for the assembly, I find:

```xml
<Script Language="ExternalAssembly" Assembly="Blog.ShowMapBug.Utilities, Version=1.0.0.0, Culture=neutral, PublicKeyToken=e767c75a9f3ac928" Function="ReturnVersion" AssemblyPath="..\Blog.ShowMapBug.Utilities\obj\Debug\Blog.ShowMapBug.Utilities.dll" />
```

So, you’re now thinking what I thought. Okay, just update the Version number there, right? Nope. It turns out that the version number here gets ignored altogether and “1.0.0.0” will continue to get mapped to “SomethingElse” (using the old assembly).

So what’s going on? It turns out that the problem is the AssemblyPath reference, which points at the Debug build of the external assembly. Once the map is deployed, you’d THINK this wouldn’t matter, but it does. The only fix I found was to point AssemblyPath at the Release build of the file, i.e. `AssemblyPath="..\Blog.ShowMapBug.Utilities\obj\Release\Blog.ShowMapBug.Utilities.dll"`.

Amazing, isn’t it? In this simple scenario it wasn’t too hard to follow. But real maps, real external assemblies and real projects are much more complex, and it took me a few hours to figure out why in the world the project wasn’t behaving as expected.
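A stale AssemblyPath is easy to miss in a large solution, so a small Python sketch like the one below could flag it before a release build. It relies only on what the map snippet above shows: a Script element with Language="ExternalAssembly" and an AssemblyPath attribute. It matches the element by its local name, so a namespace on the map's root wouldn't matter. Nothing else about the .btm file layout is assumed, and the function name is illustrative.

```python
import xml.etree.ElementTree as ET


def debug_assembly_paths(map_root):
    """Return (Assembly, AssemblyPath) pairs whose path points into an obj/Debug folder."""
    hits = []
    for element in map_root.iter():
        # Compare the local name so a namespaced tag like {ns}Script also matches.
        if element.tag.split("}")[-1] != "Script":
            continue
        if element.get("Language") != "ExternalAssembly":
            continue
        path = element.get("AssemblyPath", "")
        # Normalize separators and case before looking for the Debug output folder.
        if "/obj/debug/" in path.replace("\\", "/").lower():
            hits.append((element.get("Assembly"), path))
    return hits
```

For example, `debug_assembly_paths(ET.parse("YourMap.btm").getroot())` returns an empty list when no external-assembly reference points at a Debug build. Here "YourMap.btm" is a placeholder file name.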
